During the second half of 2022, Xbox took action against 10.19 million accounts, 40% more than in the previous six months. Penalties range from account suspension to content removal. 80% of all enforcement actions during this period came from proactive moderation, meaning action was taken before any player submitted a report. According to Microsoft, Community Sift, an automated tool that detects offensive content in text, video and images "within milliseconds," evaluated 20 billion human interactions on Xbox.

Proactive moderation was especially aggressive against fake accounts, which are often created by bots or other automated means. The system took action against 7.51 million of these accounts, 74% of all enforcement actions in the period.

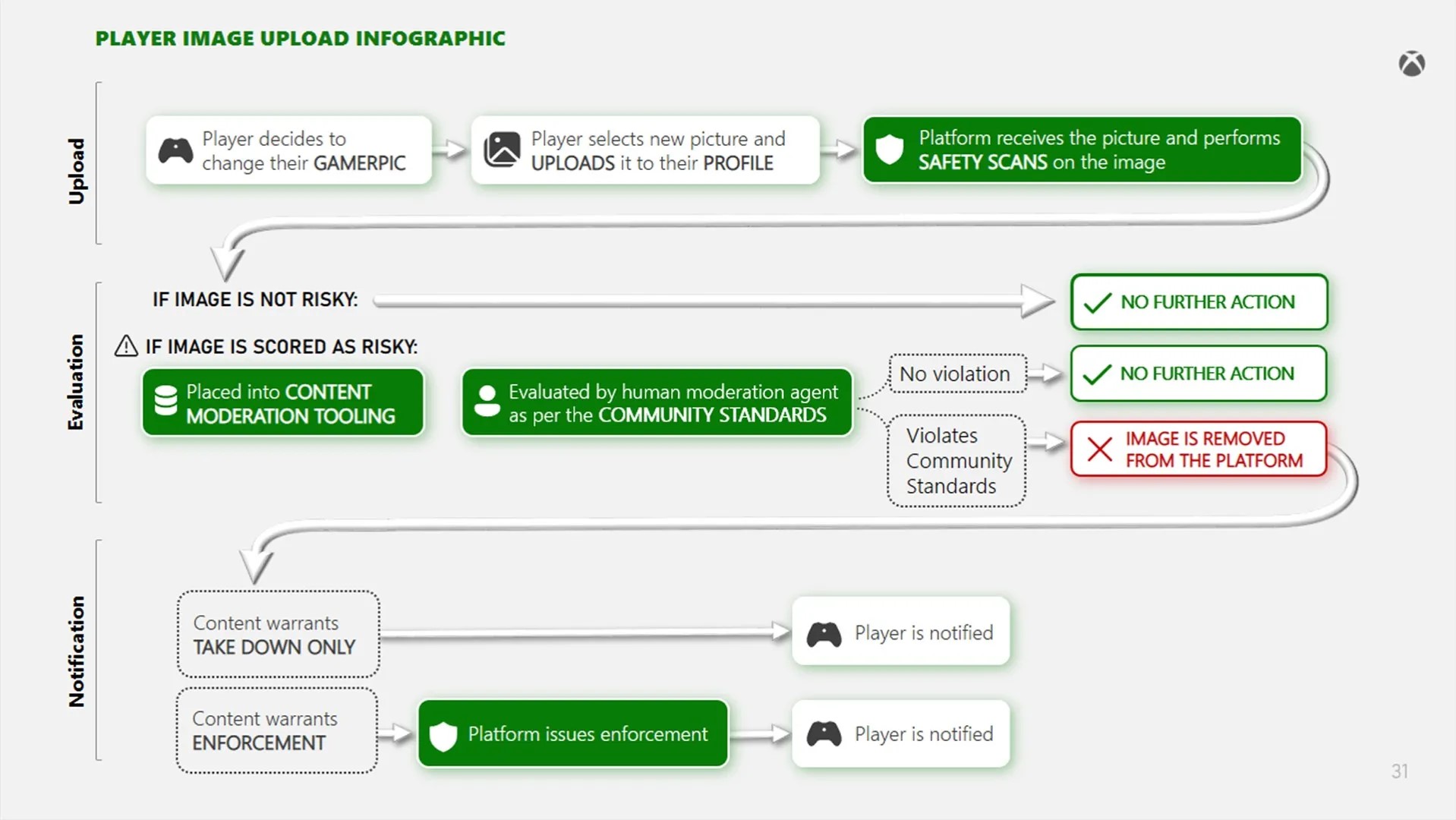

"During this latest period, we expanded our definition of offensive content to include offensive gestures, sexualized content, and off-color humor," Microsoft explains. "This type of content is generally considered offensive and detracts from the gaming experience for many of our players. This policy change, along with improvements to our image classifiers, has resulted in a 450% increase in enforcement actions against offensive content, 90.2% of them through proactive moderation. These actions often result in the removal of the content."

Although much of the moderation is proactive, players are also doing their part, submitting 24.47 million reports in the second half of 2022. Of these, 45% concerned player-to-player communications, 41% player conduct, and 11% user-generated content. Even as the Xbox player base grows, the number of reports players submit is falling, possibly thanks to proactive moderation.