Conversational artificial intelligence such as ChatGPT is here to stay, and it will soon be built not only into search engines but into a multitude of other services.

Experts are already warning that this may end up favoring cybercriminals, who can use this type of technology to defraud more people. The concern is sharpened by a recent study in which more than half of those surveyed could not tell whether a text had been written by an artificial intelligence like ChatGPT or by a human being.

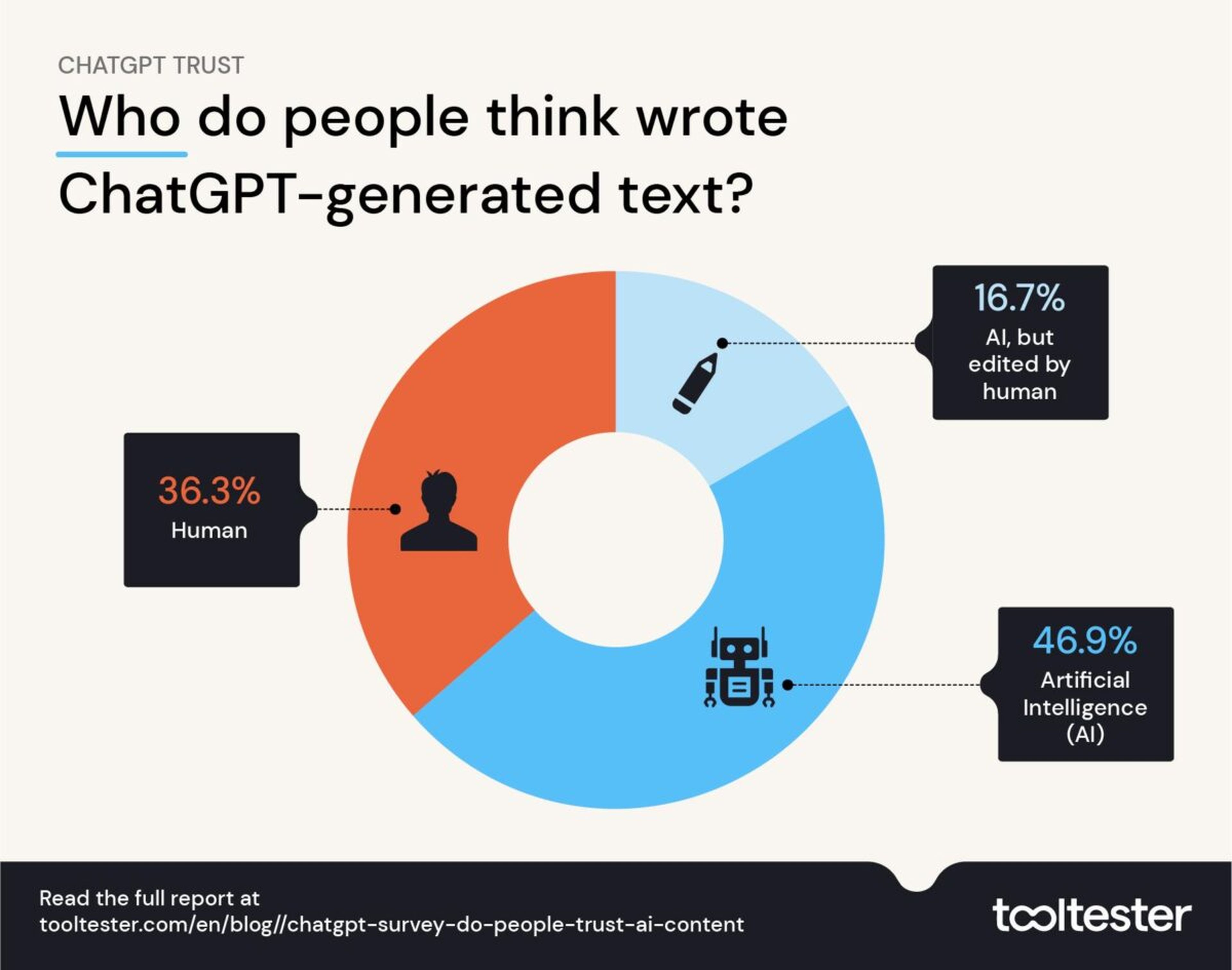

Researchers found that 53% of people could not tell the difference between content produced by a human, by an artificial intelligence, or by an artificial intelligence and then edited by a human.

The results were especially worrying among young people aged 18 to 24, of whom only 4 in 10 could tell that a text had been written by artificial intelligence.

Things looked better for those over 65, the group most able to detect that a text had been written by artificial intelligence.

“The fact that younger readers were less adept at identifying AI content surprised us,” points out Robert Brandl, CEO and founder of the tool review company Tooltester, which conducted the survey.

“It might suggest that older readers are more cynical about AI content these days, especially since it’s such a big topic in the news lately,” he comments.

“Older readers will have a broader knowledge base to draw on and will be able to compare internal perceptions of how a question should be answered better than a younger person, simply because they have had many more years of exposure to such information,” he explains.

Respondents also said there should be warnings when artificial intelligence has been used to produce content.

Variation by topic of the generated texts

The research involved 1,900 Americans, who were asked to determine whether each text had been created by a human or by an artificial intelligence. The texts spanned various fields, including health and technology.

“The results appear to show that the general public may need to rely on AI disclosures online to learn what has and has not been AI-created, as people cannot differentiate between human and AI-generated content,” Brandl says.

“We found that readers would often assume that any text, whether human or AI, was generated by AI, which could reflect the cynical attitude people are taking towards online content right now,” he adds.

“This might not be such a terrible idea, since generative AI technology is far from perfect and may contain many inaccuracies. A cautious reader is less likely to blindly accept AI content as fact,” he warns.

The researchers found that people’s ability to detect AI-generated content varied with the type of content.

For example, AI-generated health content misled users the most, with 56.1% incorrectly thinking the AI content had been written or edited by a human.

Technology was the sector in which people detected AI-generated content most easily, with 51% identifying it correctly.
