The 7.31 million enforcement actions are split between proactive ones, where the system detected the violation itself (4.78 million), and reactive ones, based on reports submitted by players (2.53 million). Of the accounts on which the moderation system acted on its own initiative, 90.5% were fake. Accounts of this kind are often automated and harm the player experience in a number of ways, including sending spam. They can also belong to cheaters or be used to artificially inflate friend or follower counts.

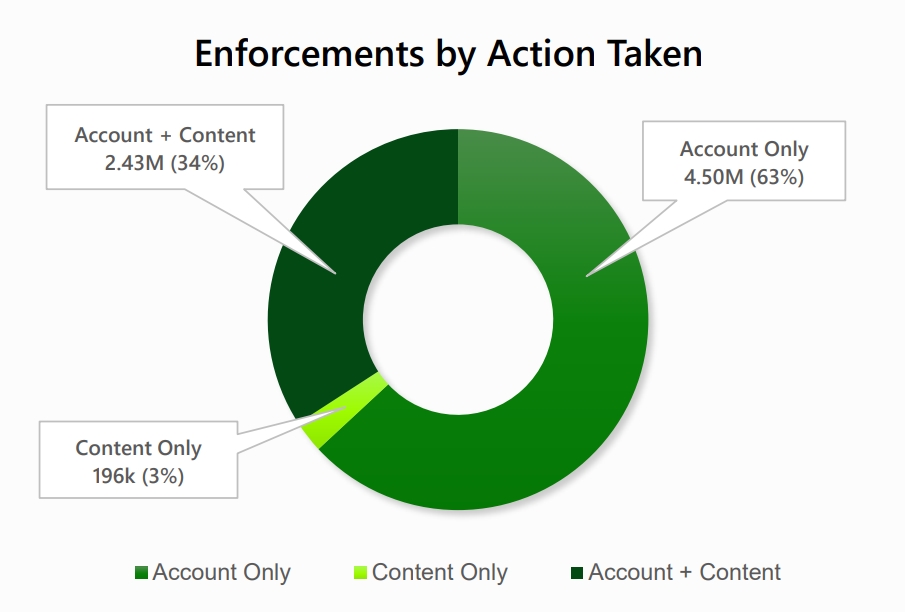

When a violation of the Xbox Community Guidelines is confirmed, three things can happen: content removal, account suspension, or a combination of both. Between January and June 2022, the account was suspended in 4.5 million cases (63%), while in 2.43 million cases (34%) the account was suspended and content was also removed. In the remaining 3% of actions, only content was removed. The report does not detail the type of suspension applied, but says that "generally" it is a temporary suspension that prevents the player from using certain functions of the Xbox service.

The transparency report also shows that as proactive detection increases, player reports decrease. During the first half of 2021, users submitted 52.02 million reports, compared with 33.08 million during the same period of 2022. Proactive detection, for its part, acted 533,000 times in the first half of 2021, while in the first half of 2022 its activity increased roughly ninefold, with action taken 4.78 million times.
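As a quick check on the comparison above, the following minimal sketch recomputes both changes from the figures quoted in this article. The variable and function names are illustrative only and do not come from Microsoft's report.

```python
# Figures quoted in the article, in millions of events per half-year.
player_reports = {"H1 2021": 52.02, "H1 2022": 33.08}
proactive_actions = {"H1 2021": 0.533, "H1 2022": 4.78}


def growth_factor(before, after):
    """How many times larger `after` is than `before`."""
    return after / before


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


proactive_growth = growth_factor(
    proactive_actions["H1 2021"], proactive_actions["H1 2022"]
)
report_change = percent_change(
    player_reports["H1 2021"], player_reports["H1 2022"]
)

print(f"Proactive actions grew {proactive_growth:.1f}x")
print(f"Player reports changed by {report_change:.1f}%")
```

With these inputs, proactive actions come out at roughly nine times the 2021 level, and player reports fall by a little over a third.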

Microsoft has committed to publishing the Xbox Transparency Report every six months.
