OpenAI and Google have unleashed a battle of the titans in the world of artificial intelligence with the launch of their flagship models: GPT-4o and Gemini 1.5 Pro. With that in mind, it was only a matter of time before someone put them to the test.

Given the fierce rivalry currently playing out in the artificial intelligence sector, two players appear to be fighting for the top spot: Google and OpenAI.

The company led by Sam Altman recently launched GPT-4o, a new model that aims to claim the top of the rankings and cement ChatGPT's lead.

Meanwhile, at Google I/O 2024, Google presented Gemini 1.5 Pro, the model that powers Gemini Advanced, so the fight between the two has only just begun.

Beebom decided to put these two new models head to head: “Now that there are two flagship models available to consumers, let’s compare ChatGPT 4o and Gemini 1.5 Pro and see which one does a better job.”

ChatGPT with GPT-4o vs. Gemini with Gemini 1.5 Pro: the winner is pretty clear

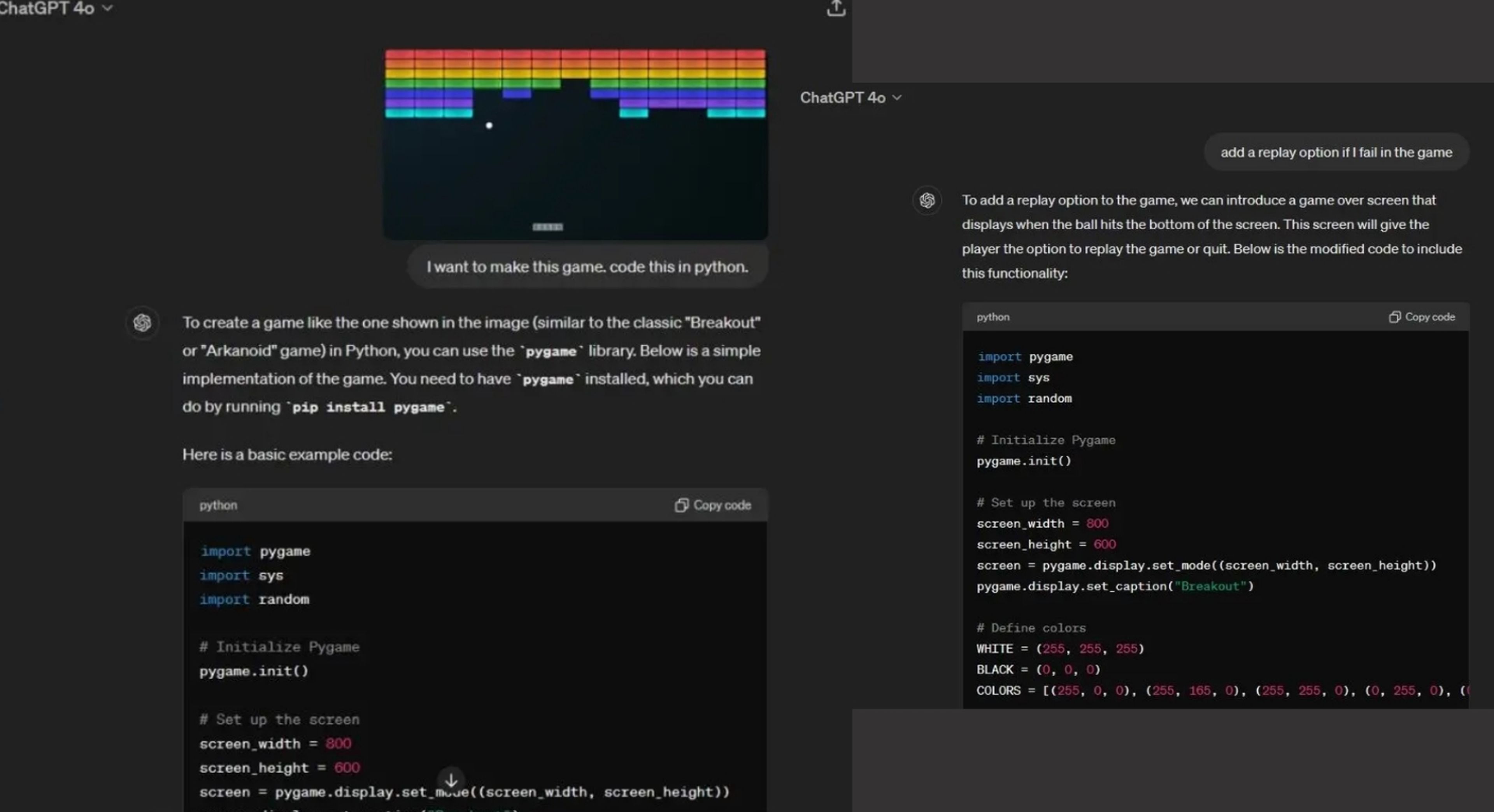

Beebom evaluated both chatbots, each running its new language model, on eight different tasks ranging from calculations to image recognition and even building a game. Spoiler: GPT-4o is light years ahead of Gemini 1.5 Pro.

Starting with classic reasoning tests, ChatGPT clearly outclassed Gemini at understanding complex questions. While Google’s model struggled to come up with accurate answers, ChatGPT showed next-level reasoning ability.

In the “magic elevator” test, both models were given the following prompt: “There is a tall building with a magic elevator. When it stops on an even floor, this elevator connects to floor 1 instead. Starting on floor 1, I take the magic elevator 3 floors up. Exiting the elevator, I use the stairs to go up 3 more floors. What floor do I end up on?”

Both gave the correct answer here, but in the “apple location” test, which calls for similar reasoning, Gemini 1.5 Pro was thoroughly exposed.

In the common-sense tests, ChatGPT again outperformed Gemini with more accurate and better-informed answers. Both models are also multimodal, meaning they can process audio and images as well as text, and here OpenAI’s model came out ahead once more, though with some caveats.

As they explain, “they continue to show that there are many areas where multimodal models need improvement. I am particularly disappointed with Gemini’s multimodal capability because it seemed so far from the right answers.”

Finally, in a coding test that involved creating a game in Python, ChatGPT again delivered better results. As for Gemini, “it generated the code, but when I ran it, the window kept closing. I couldn’t play the game at all. Simply put, for coding tasks, ChatGPT 4o is much more reliable than Gemini 1.5 Pro,” Beebom explains.

Putting all this data together, the verdict is unambiguous: “It’s evidently clear that Gemini 1.5 Pro is far behind ChatGPT 4o. Even after improving the 1.5 Pro model for months while it was in preview, it can’t compete with OpenAI’s latest GPT-4o model.”