At first glance it seems impossible. Can we predict emergent phenomena? Could we capture in a mathematical formula the appearance of a phenomenon that, until a few thousandths of a second earlier, showed no pattern at all? We know that large-scale order can emerge spontaneously, and yet until now there was no single theory to explain it.

Mathematics has the answer.

Software in the real world. A group of physicists, computer scientists and neuroscientists led by Fernando Rosas, a complex systems scientist at the University of Sussex, has developed a set of theoretical tools to identify when that emergent order occurs. The premise: think of emergence as a kind of “software in the natural world.”

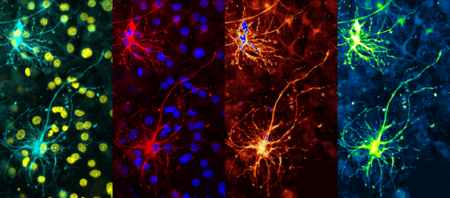

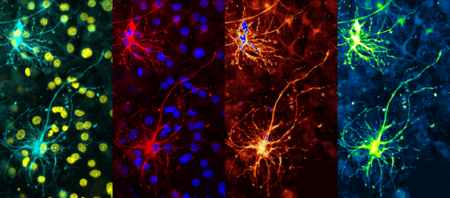

How? All complex systems exhibit this emergence, organizing themselves into a hierarchy of levels, each of which operates independently of the details of the levels below. Think, for example, of the streams of pedestrians that end up forming lanes and following one another without any pre-established order or conscious choice, or of the firing of millions of neurons that results in the single, coherent experience of understanding a text.

The researchers turn to an analogy with computer software, which appears to be “closed” and does not depend on the detailed physics of the microelectronic hardware. Rosas explains that the brain behaves this way too: our behavior is coherent even though neuronal activity is never identical from one occasion to the next. Seen this way, emergent phenomena are governed by macro-scale rules that seem autonomous, paying no attention to what the (micro) parts that compose the system are doing.

Emergent systems and closure. The work identified three different types of closure involved in emergent systems. The first: “All the details below the macro scale are not useful in predicting… the macro.” This corresponds to a purely informational closure. In other words, information from any lower (micro) level adds nothing to predicting the system at the macro level.

The second concerns the possibility of controlling the system, in addition to predicting it. The researchers' answer is again negative: the interventions we make at the macro level do not become more reliable by attempting to alter trajectories at lower levels. In other words, if lower-level information does not add any control over macro-scale outcomes, the macro level is causally closed (it alone causes its own future).

Computational mechanics. All this led them to the third type of closure in emergent systems: computational closure, a concept that rests on computational mechanics and the ε-machine (epsilon machine), a device that can exist in a finite set of states and can predict its own future state from its current one. The machine, the authors explain, can be pictured as an elevator and its buttons for going up or down.

The ε-machine would be an optimal way to represent how unspecified interactions between components “calculate” (or cause) the future state of the machine. In turn, computational mechanics allows the network of interactions between the components of a complex system to be reduced to its simplest description, called the causal state. Think again of our brains: they will never have exactly the same neuron firing pattern twice, yet in many circumstances we still end up doing the same thing.
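To make the idea of a causal state a little more concrete, here is a minimal sketch in Python. It assumes we already know, for each recent history of a process, the probabilities of the next symbol. The table `PREDICTIONS` is a hypothetical toy (a binary process in which a 1 is never followed by another 1) and does not come from the study. The function `causal_states` is likewise an illustrative name: it simply groups together the histories that lead to the same prediction, which is the intuition behind the term.

```python
from collections import defaultdict

# Hypothetical toy table (not from the study): for each two-symbol history,
# the probability of the next symbol. In this process a 1 is always
# followed by a 0, so the history "11" never occurs.
PREDICTIONS = {
    "00": {"0": 0.5, "1": 0.5},
    "10": {"0": 0.5, "1": 0.5},
    "01": {"0": 1.0, "1": 0.0},
}


def causal_states(predictions):
    """Group histories that yield the same next-symbol distribution."""
    groups = defaultdict(list)
    for history, distribution in predictions.items():
        # Histories with identical predictions share the same key.
        key = tuple(sorted(distribution.items()))
        groups[key].append(history)
    return sorted(sorted(group) for group in groups.values())


if __name__ == "__main__":
    for state in causal_states(PREDICTIONS):
        print(state)
```

Three different histories collapse into two groups: what matters for prediction is only whether the last symbol was a 0 or a 1, in the same way that many different firing patterns can lead to the same behavior.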

Machines as an answer. With these concepts in place, the researchers treated a generic complex system as a set of ε-machines working at different scales. Returning to the brain analogy, one machine could represent all the ions at the molecular scale that produce currents in our neurons, another the activation patterns of the neurons themselves, and another the activity observed in brain regions such as the hippocampus and the frontal cortex.

The system as a whole (our brain) evolves at all of these levels, and in general the relationship between the ε-machines would be complicated. But for an emergent system that is computationally “closed”, the machine at each level can be built by coarse-graining the components of the level just below it: the levels are, in the researchers’ terminology, “strongly lumpable.”
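For Markov chains, strong lumpability has a classical test: a grouping of micro states works as a macro level if every micro state inside a group sends the same total probability to each group. The following Python sketch applies that test to a hypothetical three-state chain. The matrix `P`, the partitions and the function names are illustrative assumptions, and this is not the procedure used in the study.

```python
def is_strongly_lumpable(P, partition, tol=1e-12):
    """Check whether a partition of a Markov chain's states is strongly lumpable.

    P is a row-stochastic transition matrix (list of lists) and partition
    is a list of blocks, each block a list of state indices.
    """
    for block in partition:
        for target in partition:
            # Probability of jumping into `target` from each state in `block`.
            masses = [sum(P[i][j] for j in target) for i in block]
            if max(masses) - min(masses) > tol:
                return False
    return True


def lumped_matrix(P, partition):
    """Macro-level transition matrix; only meaningful if the test passes."""
    return [
        [sum(P[block[0]][j] for j in target) for target in partition]
        for block in partition
    ]


# Hypothetical micro-level chain with three states.
P = [
    [0.5, 0.25, 0.25],
    [0.2, 0.4, 0.4],
    [0.2, 0.1, 0.7],
]

GOOD = [[0], [1, 2]]  # states 1 and 2 behave alike as seen from the macro level
BAD = [[0, 1], [2]]   # states 0 and 1 do not

if __name__ == "__main__":
    print(is_strongly_lumpable(P, GOOD))
    print(is_strongly_lumpable(P, BAD))
    print(lumped_matrix(P, GOOD))
```

With the first grouping, the macro level can be simulated without ever knowing which micro state the system is in. With the second it cannot, because micro details change the macro prediction.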

Leaks. The system described here also has leaks. The team tested their ideas by examining what they reveal about a variety of emergent behaviors in several model systems: random walks through the more or less densely connected streets of two neighborhoods, A and B; artificial neural networks of the kind used in AI; and even the polychromatic chaos of Jupiter's atmosphere, which produced the immense vortex we call the Great Red Spot.

Their conclusion: the independence between macro and micro is not complete, and there are leaks between levels. Rosas wonders whether living organisms, for example, are actually optimized by allowing this partial, “leaky” emergence, because in life, “sometimes it is essential that the macro pays attention to the details of the micro.”

A model to explain the causes. Finally, the study argues that this research could help determine when we can and cannot hope to build predictive models of complex systems at the macro scale. The framework also has implications for understanding more delicate questions of cause and effect in complex, emergent systems.

For Rosas, causality in an emergent system can operate at a higher level regardless of lower-level details. The work, however, is far from finished, at least for now. If the study says that nature itself is the source of the software that explains emergent systems, who the hell wrote the code that controls emergence?

Image | James Cridland, deepak pal, Derek Bruff, Enricobagnoli
